In the rapidly evolving field of artificial intelligence (AI) development services, securing AI models is a top priority. As AI spreads into more domains, models must be robust and reliable in order to protect sensitive data, maintain user trust, and resist malicious exploitation. This article covers ten techniques for securing AI models so that organizations can offer reliable AI development services.

1. Data Privacy and Encryption

- Protecting sensitive data is the cornerstone of AI model security. Use strong, well-established encryption to protect data both in transit (for example, with TLS) and at rest.

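- Use techniques such as differential privacy, which adds carefully calibrated noise to query results or to the training process so that outputs reveal little about any single individual. This makes it much harder to reverse-engineer the dataset or identify specific people in it, although the strength of the guarantee depends on the privacy budget chosen.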
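As a minimal sketch of differential privacy, the Python example below applies the Laplace mechanism to a single count query rather than to the raw records. The patient data, the `private_count` helper, and the epsilon value are hypothetical; production systems would normally rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable[dict], predicate: Callable[[dict], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical patient records; only the noisy aggregate is ever released.
patients = [{"age": a, "diabetic": a % 3 == 0} for a in range(20, 80)]
rng = random.Random(42)
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
print(f"noisy diabetic count: {noisy:.1f}")
```

A smaller epsilon means more noise and stronger privacy. The sensitivity of 1 holds only for counting queries; other statistics need their own noise calibration.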

2. Model Architecture

- Start from a secure, well-designed model architecture, and review and update it regularly as new vulnerabilities are discovered.

- Use techniques such as model pruning and quantization to reduce the model's size, which may also shrink its deployment footprint and attack surface.
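To illustrate what pruning and quantization actually do, here is a plain-Python sketch of magnitude pruning and symmetric int8 quantization on a single list of weights. The layer values and the 50% sparsity are made up for illustration; deep learning frameworks ship their own, more complete utilities for both steps.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices of the k smallest-magnitude weights.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, -0.9, 0.15]  # hypothetical layer weights
pruned = magnitude_prune(layer, sparsity=0.5)
q, scale = quantize_int8(pruned)
print(pruned)
print(q, scale)
```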

3. Input Validation and Sanitization

- Validate and sanitize every input to the AI model, and escape its outputs before displaying them, to prevent common attacks such as injection and cross-site scripting (XSS).

- Define what valid input looks like (type, shape, length, and value ranges) and reject anything that does not match, so that only valid data reaches the model.
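The validation rules above can be sketched as an allowlist check in Python. The four-feature schema, the value ranges, and the length limit are hypothetical. The idea is to reject anything outside the expected shape, type, and range, and to HTML-escape model output before it is shown in a web page, which is where cross-site scripting would occur.

```python
import html
import math
import unicodedata
from typing import List, Sequence

# Hypothetical schema for a tabular model: 4 features, each within a known range.
FEATURE_RANGES = [(0.0, 120.0), (0.0, 1.0), (0.0, 1.0), (-5.0, 5.0)]
MAX_TEXT_LENGTH = 2000  # hypothetical limit for free-text fields


def validate_features(values: Sequence[object]) -> List[float]:
    """Reject inputs with the wrong shape, wrong type, NaN/inf, or out-of-range values."""
    if len(values) != len(FEATURE_RANGES):
        raise ValueError(f"expected {len(FEATURE_RANGES)} features, got {len(values)}")
    clean = []
    for i, (v, (lo, hi)) in enumerate(zip(values, FEATURE_RANGES)):
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(v, bool) or not isinstance(v, (int, float)):
            raise ValueError(f"feature {i} is not numeric")
        v = float(v)
        if not math.isfinite(v) or not lo <= v <= hi:
            raise ValueError(f"feature {i}={v} outside [{lo}, {hi}]")
        clean.append(v)
    return clean


def sanitize_text(text: str) -> str:
    """Enforce a length limit and drop Unicode category C characters (control, format, ...)."""
    if len(text) > MAX_TEXT_LENGTH:
        raise ValueError("text too long")
    # Newlines and tabs are category Cc too, so keep them explicitly.
    return "".join(ch for ch in text if ch in "\n\t" or not unicodedata.category(ch).startswith("C"))


def render_output(model_output: str) -> str:
    """Escape model output before inserting it into an HTML page (mitigates XSS)."""
    return html.escape(model_output)
```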

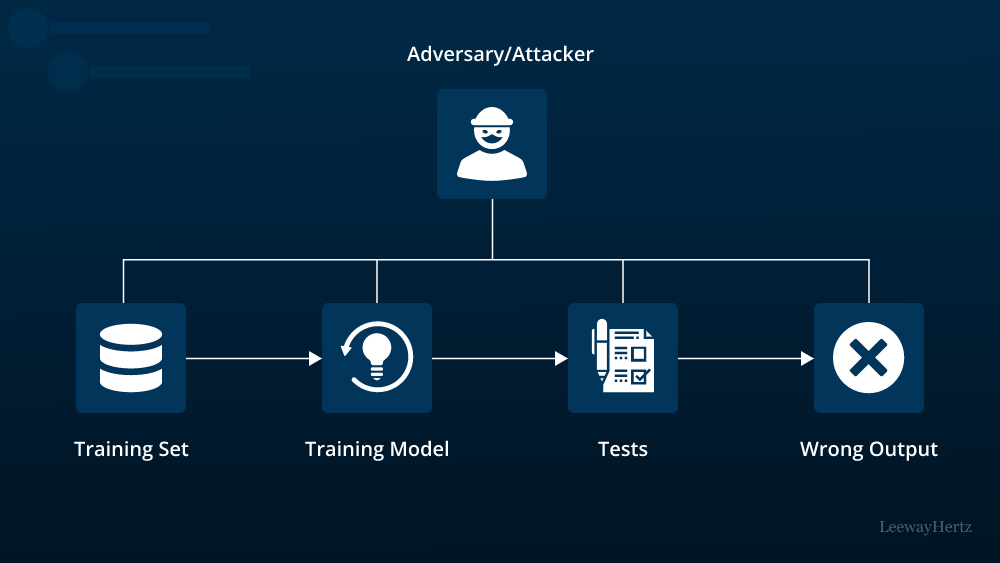

4. Adversarial Defense

- Adversarial attacks exploit weaknesses in AI models by making small, often imperceptible changes to inputs that cause the model to misclassify them.

- Use adversarial defenses such as adversarial training (training on deliberately perturbed examples) and robust optimization to improve the model's resilience against such attacks.
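Here is a self-contained sketch of both sides of the problem for a toy logistic-regression classifier: the Fast Gradient Sign Method (FGSM), a well-known way to craft adversarial inputs, and a simple form of adversarial training that fits the model on each clean example together with its FGSM counterpart. The data, the step size `eps`, and the training settings are hypothetical, and real models would use a framework's automatic differentiation instead of the hand-derived gradient used here.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Vector, b: float, x: Vector) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w: Vector, b: float, x: Vector, y: int) -> float:
    p = min(max(predict_proba(w, b, x), 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Vector, b: float, x: Vector, y: int, eps: float) -> Vector:
    """Fast Gradient Sign Method: move each input coordinate by eps along sign(d loss / dx)."""
    p = predict_proba(w, b, x)
    grad_x = [(p - y) * wi for wi in w]  # gradient of the log loss w.r.t. the input
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]


def adversarial_train(data: List[Tuple[Vector, int]], eps: float, lr: float = 0.1,
                      epochs: int = 50, seed: int = 0) -> Tuple[Vector, float]:
    """SGD on logistic regression where each clean example is paired with its FGSM version."""
    rng = random.Random(seed)
    data = list(data)  # avoid shuffling the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            # Craft the adversarial example against the current model, then train on both.
            for xt in (x, fgsm(w, b, x, y, eps)):
                err = predict_proba(w, b, xt) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xt)]
                b -= lr * err
    return w, b


# Toy, hypothetical data: two well-separated 2-D clusters.
gen = random.Random(1)
data = [([gen.gauss(2, 0.3), gen.gauss(2, 0.3)], 1) for _ in range(50)] + \
       [([gen.gauss(-2, 0.3), gen.gauss(-2, 0.3)], 0) for _ in range(50)]
w, b = adversarial_train(data, eps=0.3)
robust = sum((predict_proba(w, b, fgsm(w, b, x, y, 0.3)) > 0.5) == (y == 1) for x, y in data)
print(f"accuracy on FGSM inputs: {robust / len(data):.2f}")
```

Training against an attack tends to improve robustness to that attack, but it is no guarantee against stronger or different ones.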

5. Model Transparency and Explainability

- Black-box AI models are hard to secure because defenders cannot easily see how they reach their decisions, which leaves room for attackers to exploit hidden weaknesses.

- Use explainable AI techniques, such as feature attribution methods (for example, SHAP or LIME), to understand model decisions and spot risks such as reliance on unexpected features, making vulnerabilities easier to find and fix.
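SHAP and LIME are full libraries; as a lighter, model-agnostic illustration, the sketch below swaps in permutation feature importance, which measures how much accuracy drops when one feature's values are shuffled. The `black_box` model and the data are hypothetical. A feature that the model relies on heavily, but that should not matter, is exactly the kind of hidden risk this technique can surface.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(predict: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(predict: Callable[[Row], int], X: List[Row], y: List[int],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when one feature column is shuffled (model-agnostic)."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black-box model that (secretly) uses only feature 0.
def black_box(x: Row) -> int:
    return int(x[0] > 0.5)


X = [[i / 9, (i * 7 % 10) / 9] for i in range(10)]
y = [black_box(x) for x in X]
print(permutation_importance(black_box, X, y))
```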

6. Continuous Monitoring

- Deploy monitoring tools that detect unusual model behavior and potential attacks in real time, for example sudden shifts in the input distribution or spikes in request volume.

- Implement logging and auditing to track who uses the model and how, and to identify suspicious activity.
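A minimal sketch of monitoring plus audit logging, using only Python's standard library: every request is logged, and an alert fires when the rolling mean of an input feature drifts too far from its training baseline. The baseline statistics, window size, and z-score threshold are hypothetical, and the z-test assumes roughly independent requests; dedicated monitoring tools track many more signals.

```python
import logging
import math
from collections import deque

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("model_monitor")


class InputDriftMonitor:
    """Flag when the rolling mean of a feature drifts from its training baseline.

    Uses a z-test on the window mean: z = (mean - mu) / (sigma / sqrt(n)).
    """

    def __init__(self, baseline_mean: float, baseline_std: float,
                 window: int = 50, z_threshold: float = 3.0):
        self.mu, self.sigma = baseline_mean, baseline_std
        self.values = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, value: float, request_id: str = "-") -> bool:
        """Record one request; return True if the current window looks anomalous."""
        self.values.append(value)
        # Audit trail: record every request that reaches the model.
        logger.info("request=%s feature=%.3f", request_id, value)
        if len(self.values) < self.values.maxlen:
            return False  # not enough data yet
        n = len(self.values)
        mean = sum(self.values) / n
        z = (mean - self.mu) / (self.sigma / math.sqrt(n))
        if abs(z) > self.z_threshold:
            logger.warning("possible drift or attack: z=%.1f over last %d requests", z, n)
            return True
        return False
```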

7. Secure APIs and Endpoints

- Secure APIs and endpoints with authentication, access controls, and encryption to prevent unauthorized access.

- Use rate limiting to protect against brute-force and denial-of-service attacks.
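The sketch below combines API-key authentication with a per-client token-bucket rate limiter. The in-memory `API_KEYS` table and the limits (bursts of 5 requests, refilled at 1 request per second) are hypothetical; a real service would load keys from a secrets store and often enforces limits at an API gateway, but the logic is the same. The injectable `clock` makes the limiter easy to test.

```python
import hmac
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical plaintext key store, for illustration only.
API_KEYS = {"client-a": "s3cr3t-key-a"}
buckets: Dict[str, TokenBucket] = {}


def handle_request(client_id: str, presented_key: str) -> int:
    """Return an HTTP-style status code: 401 unauthenticated, 429 rate-limited, 200 OK."""
    expected = API_KEYS.get(client_id)
    # compare_digest avoids content-based short-circuiting, which helps resist timing attacks.
    if expected is None or not hmac.compare_digest(expected.encode(), presented_key.encode()):
        return 401
    bucket = buckets.setdefault(client_id, TokenBucket(capacity=5, rate=1.0))
    return 200 if bucket.allow() else 429
```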

8. Regular Updates and Patch Management

- Keep the AI model and all related components (frameworks, libraries, operating systems, and container images) up to date with the latest security patches and fixes.

- Establish a systematic process for applying updates regularly and for monitoring security advisories that affect your dependencies.
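One small piece of such a process is an automated check that installed dependencies are not older than the versions your team has decided contain necessary fixes. The `POLICY` versions below are placeholders, not real advisories, and the version parsing is deliberately rough; the `packaging` library's `Version` class handles pre-releases properly, and a dedicated scanner such as pip-audit can compare dependencies against published vulnerability databases.

```python
import re
from importlib import metadata
from typing import Dict, List, Optional, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Very rough parse of 'X.Y.Z' into integers; pre-release tags are ignored."""
    parts = []
    for piece in v.split("."):
        m = re.match(r"\d+", piece)
        if not m:
            break
        parts.append(int(m.group()))
    return tuple(parts)


def is_older(a: str, b: str) -> bool:
    """True if version a < version b, padding with zeros so '1.22' equals '1.22.0'."""
    va, vb = parse_version(a), parse_version(b)
    width = max(len(va), len(vb))
    va += (0,) * (width - len(va))
    vb += (0,) * (width - len(vb))
    return va < vb


def installed_version(package: str) -> Optional[str]:
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_outdated(installed: Dict[str, str], minimum_safe: Dict[str, str]) -> List[str]:
    """List packages whose installed version is below the minimum patched version."""
    return sorted(name for name, floor in minimum_safe.items()
                  if name in installed and is_older(installed[name], floor))


# Hypothetical policy: minimum versions your team has decided contain needed fixes.
POLICY = {"numpy": "1.22.0", "requests": "2.31.0"}
current = {}
for name in POLICY:
    version = installed_version(name)
    if version is not None:
        current[name] = version
print("needs patching:", find_outdated(current, POLICY))
```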

9. Model Watermarking and Intellectual Property Protection

- Use watermarking techniques to embed ownership information in the AI model, so that you can prove ownership and detect misuse if the model is stolen or redistributed.

- Consider additional intellectual property protections, such as restrictive licensing and strict access control over the model's weights, code, and design.
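A common family of watermarking schemes uses a secret trigger set: the owner trains the model to output chosen labels on secret inputs, then later checks whether a suspect model reproduces those labels far more often than chance would allow. The sketch below shows only the verification step. The embedding (training) step is omitted, the lookup-table "watermarked" model is a stand-in, and the 0.9 threshold is a hypothetical choice.

```python
import random
from typing import Callable, List, Sequence, Tuple

Input = Tuple[float, ...]


def make_trigger_set(n: int, dim: int, num_classes: int, secret_seed: int) -> List[Tuple[Input, int]]:
    """Owner side: secret random inputs paired with randomly chosen labels."""
    rng = random.Random(secret_seed)
    return [(tuple(rng.uniform(-1, 1) for _ in range(dim)), rng.randrange(num_classes))
            for _ in range(n)]


def verify_watermark(predict: Callable[[Input], int], triggers: Sequence[Tuple[Input, int]],
                     threshold: float = 0.9) -> Tuple[bool, float]:
    """A model that reproduces the secret labels far above chance likely carries the watermark."""
    matches = sum(predict(x) == label for x, label in triggers)
    rate = matches / len(triggers)
    return rate >= threshold, rate


triggers = make_trigger_set(n=20, dim=8, num_classes=10, secret_seed=1234)

# Stand-in for a watermarked model: during training it learned the trigger labels.
memorized = dict(triggers)


def watermarked_model(x: Input) -> int:
    return memorized.get(x, 0)


def unrelated_model(x: Input) -> int:
    return 0  # stand-in for an independently trained model


print(verify_watermark(watermarked_model, triggers))  # (True, 1.0)
print(verify_watermark(unrelated_model, triggers))
```

With 10 classes, an unrelated model matches about 1 in 10 trigger labels by chance, so a match rate near 1.0 is strong evidence of copying.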

10. Secure Model Deployment

- Secure the deployment process by running the AI model in containers or virtual machines.

- Isolate the AI model from the rest of the system to limit the damage if a breach occurs.
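Containers and virtual machines are configured with their own tooling, but one step of a secure deployment pipeline that fits in a few lines of Python is verifying that the model file being deployed is exactly the one that was released. The sketch assumes, hypothetically, that a SHA-256 hash is recorded at release time, and it refuses to deploy a file whose hash does not match.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Refuse to deploy a model file whose hash differs from the one recorded at release time."""
    actual = sha256_of(path)
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise RuntimeError(f"integrity check failed for {path}: got {actual}")


# Usage with a throwaway file standing in for a real model artifact.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical artifact name
    model_path.write_bytes(b"example weights")
    released_hash = sha256_of(model_path)  # recorded at release time
    verify_artifact(model_path, released_hash)  # passes silently
    model_path.write_bytes(b"tampered weights")
    try:
        verify_artifact(model_path, released_hash)
    except RuntimeError as err:
        print("blocked deployment:", err)
```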

Conclusion

AI development services have immense potential to shape the future of many industries, but their security must remain a top priority. A robust security strategy helps organizations protect the integrity and confidentiality of their AI models. The techniques in this article provide a strong foundation for securing AI models, safeguarding sensitive data, and maintaining user trust. As AI continues to advance, security practices must be adapted and reinforced to stay ahead of new threats and attacks.

To learn more: https://www.leewayhertz.com/ai-model-security/