Transformer models have reshaped natural language processing (NLP), achieving strong results on tasks such as machine translation, text generation, and sentiment analysis. One extension of the standard Transformer architecture is the Action Transformer model, which incorporates explicit actions into the language modeling process so that the model can use them for contextual understanding and decision-making.

Understanding Transformers: A Brief Overview

Before delving into the workings of the Action Transformer model, let’s briefly review the fundamentals of Transformers. Introduced by Vaswani et al. in 2017, Transformers use self-attention to process input sequences. Self-attention lets the model weigh the importance of each word against every other word in the sequence, which helps it capture long-range dependencies and context.
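To make the weighting step concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification: queries, keys, and values are all taken to be the input vectors themselves (no learned projections, a single head), and the toy `tokens` vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys, and values are the inputs themselves;
    a real Transformer learns separate projection matrices for each.
    """
    d = len(vectors[0])
    outputs, weights = [], []
    for q in vectors:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in vectors]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of all input vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, vectors))
                        for i in range(d)])
    return outputs, weights

# Three toy 2-dimensional "word" vectors (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual, attn = self_attention(tokens)
```

Each row of `attn` shows how much one position attends to every other position, and `contextual` holds the resulting contextualized vectors.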

The original Transformer uses an encoder-decoder architecture. The encoder processes the input sequence and produces contextualized embeddings, and the decoder uses those embeddings to generate the desired output.

Introducing Action Transformers

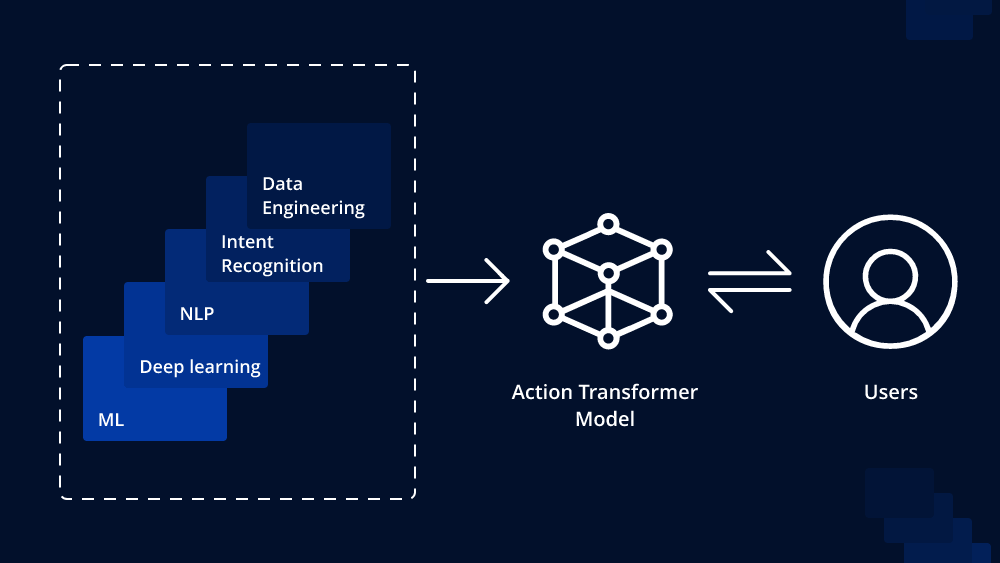

While standard Transformers excel at capturing context, they lack explicit mechanisms for incorporating actions or dynamic decision-making. Action Transformers address this limitation by extending the Transformer with action-specific components, making it suitable for tasks that involve sequential decision-making or action-based outcomes.

Action Embeddings

The Action Transformer introduces action embeddings, which are representations of specific actions associated with the input sequence. These action embeddings are learned during the training process and are combined with word embeddings to provide a more comprehensive understanding of the context.
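As a rough sketch of how the two kinds of embeddings might be combined, the snippet below looks up a word vector and an action vector for each position and adds them element-wise. The article does not say how the combination is done, so the addition, the table names (`word_table`, `action_table`), the vocabulary, and the random initial values are all assumptions made for illustration. In a trained model the table entries would be learned.

```python
import random

random.seed(0)  # fixed seed so the toy tables are reproducible

EMBED_DIM = 4
WORDS = ["the", "cat", "Paris"]            # hypothetical vocabulary
ACTIONS = ["translate", "copy", "ignore"]  # action labels from the article's example

def make_table(keys, dim):
    """Build a lookup table of randomly initialized vectors (stand-ins for learned ones)."""
    return {k: [random.uniform(-1.0, 1.0) for _ in range(dim)] for k in keys}

word_table = make_table(WORDS, EMBED_DIM)
action_table = make_table(ACTIONS, EMBED_DIM)

def embed(tokens, actions):
    """Combine word and action embeddings per position.

    Assumption: element-wise addition; concatenation would be another option.
    """
    if len(tokens) != len(actions):
        raise ValueError("each token needs exactly one action")
    return [
        [w + a for w, a in zip(word_table[tok], action_table[act])]
        for tok, act in zip(tokens, actions)
    ]

combined = embed(["the", "cat", "Paris"], ["translate", "translate", "copy"])
```

Addition keeps the embedding dimension unchanged, which mirrors how positional encodings are added to word embeddings in the original Transformer.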

For example, in a machine translation scenario, the action embeddings could represent the actions of “translate,” “copy,” or “ignore” for each word in the source sentence. This additional information helps the model make informed decisions during the translation process, leading to more accurate and contextually appropriate translations.

Action Mechanism

The Action Transformer incorporates an action mechanism that lets the model adjust its behavior dynamically based on the given action embeddings. The mechanism injects action information at several stages of the Transformer’s processing.

During self-attention, the model pays attention not only to words in the input sequence but also to the associated action embeddings. This dual attention allows the model to weigh both words and actions when generating contextualized embeddings, resulting in a more comprehensive understanding of the context.
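One way to picture this dual attention is to let each word attend over a combined "memory" made of the word vectors followed by the action vectors. The sketch below does that in plain Python. It is an illustrative reading of the paragraph above, not a confirmed description of the model: the function name `dual_attention`, the choice to append actions along the sequence axis, and the toy vectors are all assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dual_attention(word_vecs, action_vecs):
    """Let every word attend over both words and actions.

    Assumption: keys and values are the word vectors followed by the
    action vectors; no learned projections are used in this sketch.
    """
    d = len(word_vecs[0])
    memory = word_vecs + action_vecs  # words first, then actions
    outputs, weights = [], []
    for q in word_vecs:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in memory]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, memory))
                        for i in range(d)])
    return outputs, weights

# Toy vectors, illustrative values only.
word_vecs = [[1.0, 0.0], [0.0, 1.0]]
action_vecs = [[0.5, 0.5], [1.0, 0.0]]
outputs, weights = dual_attention(word_vecs, action_vecs)
```

Each row of `weights` has one entry per word followed by one entry per action, so the split between the two halves shows how much a position relies on words versus actions.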

Dynamic Decision-making

One of the primary advantages of the Action Transformer is its ability to make a decision at each step of the sequence. Standard Transformers generate output based solely on the input context, whereas Action Transformers also use the action information to choose which action to take at each step during inference.

Continuing with the machine translation example, the Action Transformer can decide to translate a word, copy it as-is from the source, or ignore it entirely based on the specific action embeddings provided. This enables the model to handle out-of-vocabulary words (for example, by copying them), improve translation accuracy, and cope with a wider range of translation scenarios.
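The per-word decision can be sketched as a small decoding loop. Everything here is hypothetical: the action scores are hard-coded rather than produced by a model, the tiny English-to-French `lexicon` stands in for the actual translation step, and the names `pick_action` and `decode` are illustrative.

```python
ACTIONS = ("translate", "copy", "ignore")

def pick_action(scores):
    """Return the highest-scoring action; `scores` maps action name to score."""
    return max(ACTIONS, key=lambda a: scores[a])

def decode(tokens, action_scores, lexicon):
    """Apply one action per source token and collect the output tokens."""
    output = []
    for token, scores in zip(tokens, action_scores):
        action = pick_action(scores)
        if action == "translate":
            # Fall back to copying when the word is missing from the lexicon.
            output.append(lexicon.get(token, token))
        elif action == "copy":
            output.append(token)
        # "ignore": emit nothing for this token
    return output

# Hypothetical toy lexicon and hand-written action scores.
lexicon = {"the": "le", "cat": "chat", "visits": "visite"}
tokens = ["the", "cat", "um", "visits", "Paris"]
action_scores = [
    {"translate": 0.90, "copy": 0.05, "ignore": 0.05},
    {"translate": 0.80, "copy": 0.10, "ignore": 0.10},
    {"translate": 0.10, "copy": 0.10, "ignore": 0.80},
    {"translate": 0.70, "copy": 0.20, "ignore": 0.10},
    {"translate": 0.20, "copy": 0.70, "ignore": 0.10},
]

result = decode(tokens, action_scores, lexicon)
print(result)  # ['le', 'chat', 'visite', 'Paris']
```

The filler word "um" is dropped and the proper noun "Paris" is copied unchanged, which is the kind of behavior the copy and ignore actions are meant to enable.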

Applications of Action Transformers

Action Transformers are suited to several kinds of applications. Some notable uses include:

- Machine Translation: As mentioned earlier, Action Transformers enhance the translation process by dynamically deciding how to handle each word based on specific translation actions.

- Dialogue Generation: In conversational AI systems, Action Transformers can generate responses while considering the conversational context and action instructions like “clarify,” “elaborate,” or “summarize.”

- Image Captioning: When describing images, Action Transformers can determine whether to focus on specific objects, attributes, or relationships in the scene, leading to more informative captions.

Conclusion

The Action Transformer model combines the Transformer architecture with dynamic decision-making. By incorporating action embeddings and an action mechanism, the model can produce more contextually relevant outputs, which makes it useful for tasks involving sequential actions and dynamic contexts. As research in this area progresses, we can expect more sophisticated applications and improved performance across a range of NLP domains.

To learn more: https://www.leewayhertz.com/action-transformer-model/