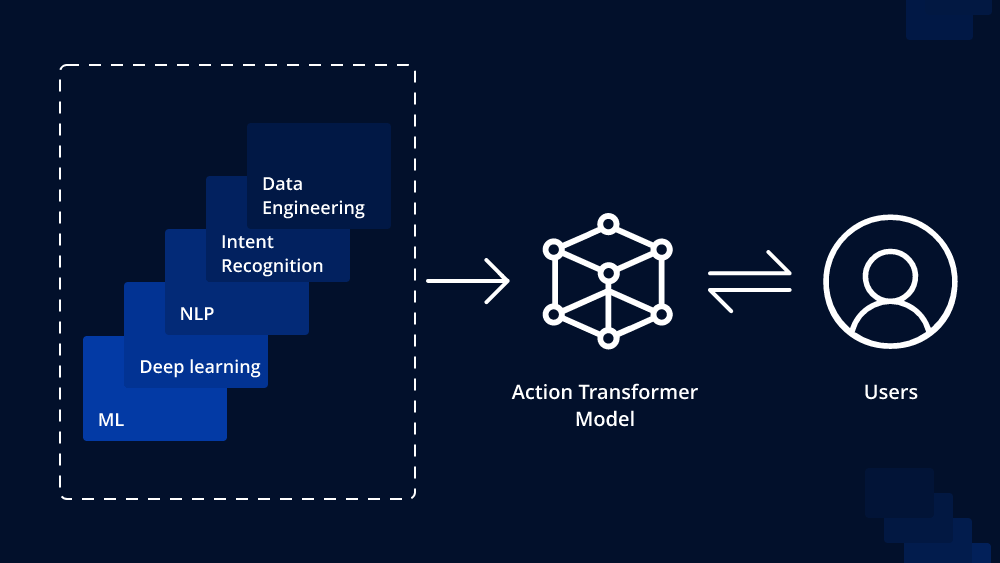

Natural language processing (NLP) has advanced rapidly in recent years, and one notable development is the Action Transformer model. An Action Transformer extends the standard Transformer, which has proven highly effective in NLP tasks such as machine translation, text summarization, and sentiment analysis. It goes a step further by adding explicit reasoning and decision-making capabilities, which makes it particularly useful for tasks that involve multi-step actions or dynamic environments.

Understanding the Action Transformer Model

The Action Transformer Model is built upon the principles of the original Transformer architecture, which employs self-attention mechanisms to capture contextual information from input sequences. The primary difference lies in the incorporation of an “action space,” which allows the model to perform explicit reasoning steps and generate dynamic sequences of actions.

Standard Transformers already accept inputs and produce outputs of varying length, up to a maximum context size. The Action Transformer differs in what it generates: a sequence of actions whose length is not fixed in advance and can depend on the input and on earlier actions. This lets it model dynamic processes and decision-making more flexibly.
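To make the self-attention mechanism mentioned above more concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is illustrative only. It assumes identity projections (the queries, keys, and values are the raw input vectors), whereas a real Transformer layer learns separate projection matrices. The function name `self_attention` and the toy `tokens` input are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Single-head scaled dot-product self-attention.

    Assumption: queries, keys, and values are the raw inputs
    (identity projections); a real layer learns W_q, W_k, W_v.
    """
    d = len(X[0])
    out, weights = [], []
    for q in X:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is an attention-weighted mix of all inputs.
        out.append([sum(w[j] * X[j][c] for j in range(len(X))) for c in range(d)])
    return out, weights

# Toy input: three 2-dimensional token vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, attn = self_attention(tokens)
```

Nothing in the function depends on the number of tokens, so the same code handles sequences of any length.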

Key Components of an Action Transformer

- Input Representation: As in the original Transformer, the input sequence is first tokenized and mapped to continuous vectors by an embedding layer, with positional encodings added to preserve token order. These vectors serve as the initial input to the model.

- Action Space: The action space is a set of possible actions that the model can take at each step. These actions can be predefined or learned during the training process. The model generates a probability distribution over the action space at each decoding step, allowing it to make informed decisions.

- Action Decoder: The Action Transformer incorporates an action decoder that takes into account the action space probabilities and generates the next action. This decoder, coupled with the attention mechanism, enables the model to dynamically adapt its behavior based on the input and previous actions.

- Reinforcement Learning: To train an Action Transformer, reinforcement learning techniques are often employed. The model is trained to maximize a reward signal, which is provided based on the success of its generated actions in achieving the desired task.
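The action-space and reinforcement-learning components above can be sketched together on a toy problem. The example below is a minimal sketch, not an Action Transformer implementation. It assumes a three-action space, uses a plain list of logits as a stand-in for the action decoder's output, and applies the REINFORCE policy-gradient rule as one possible training technique. The action names and the `toy_reward` function are hypothetical.

```python
import math
import random

ACTIONS = ["click", "type", "scroll"]  # hypothetical action space

def softmax(logits):
    """Turn raw scores into a probability distribution over actions."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_action(probs, rng):
    """Draw an action index from the categorical distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def reinforce_step(logits, action, reward, lr=0.1):
    """One REINFORCE update: logits += lr * reward * grad log pi(action).

    For a softmax policy, d/d logit_i of log pi(a) = 1[i == a] - pi(i).
    """
    probs = softmax(logits)
    return [
        l + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (l, p) in enumerate(zip(logits, probs))
    ]

def toy_reward(action):
    """Hypothetical reward: only "type" (index 1) completes the task."""
    return 1.0 if action == 1 else 0.0

rng = random.Random(0)
logits = [0.0, 0.0, 0.0]  # stand-in for the action decoder's output
for _ in range(500):
    a = sample_action(softmax(logits), rng)
    logits = reinforce_step(logits, a, toy_reward(a))

final_probs = softmax(logits)
```

After training, `final_probs` puts most of its mass on the rewarded action. In a real model the logits would be computed from the input and the action history rather than stored as free parameters.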

Implementing an Action Transformer

Implementing an Action Transformer can be a complex task, but we can outline the general steps involved:

- Data Preprocessing: Prepare a dataset suited to the task at hand, along with the corresponding action space and reward definitions. Ensure that the input sequences and actions are appropriately tokenized.

- Architecture Design: Define the architecture of the Action Transformer, including the number of layers, attention mechanisms, and action decoder.

- Model Training: Train the model using a suitable optimization algorithm and reinforcement learning techniques. This involves updating the model’s parameters based on the reward signals received during the training process.

- Evaluation: Evaluate the model’s performance on a held-out validation dataset. Tune hyperparameters and make any other adjustments needed to improve the model’s performance.

- Inference: Once the model is trained, it can be used for inference on new input sequences to generate desired action sequences.
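The inference step can be pictured as a loop that keeps choosing actions until the model signals that it is done. The sketch below assumes greedy decoding and an explicit `STOP` action, neither of which is prescribed by the text above. `toy_policy` is a hand-written, hypothetical stand-in for a trained model, and the action names are made up for illustration.

```python
ACTIONS = ["open_form", "fill_field", "submit", "STOP"]  # hypothetical

def toy_policy(instruction, history):
    """Stand-in for a trained model: returns one score per action.

    Assumption: this hand-written rule simply prefers the next action in
    a fixed plan and ignores the instruction; a real model would compute
    the scores from the instruction and the action history.
    """
    next_index = min(len(history), len(ACTIONS) - 1)
    return [1.0 if i == next_index else 0.0 for i in range(len(ACTIONS))]

def generate_actions(instruction, policy, max_steps=10):
    """Greedy decoding: pick the top-scoring action until STOP or max_steps."""
    history = []
    for _ in range(max_steps):
        scores = policy(instruction, history)
        best = max(range(len(scores)), key=scores.__getitem__)
        if ACTIONS[best] == "STOP":
            break
        history.append(ACTIONS[best])
    return history

plan = generate_actions("submit the signup form", toy_policy)
print(plan)  # ['open_form', 'fill_field', 'submit']
```

The `max_steps` cap is a safety limit so that a policy that never emits `STOP` cannot loop forever. Sampling from the action distribution instead of taking the top score is a common alternative to greedy decoding.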

Applications of Action Transformer

The Action Transformer Model finds applications in various fields, including:

- Dialogue Systems: Building chatbots or virtual assistants that can engage in dynamic and contextually relevant conversations.

- Robotics: Enabling robots to interact with their environments and perform multi-step tasks based on natural language commands.

- Gaming: Creating intelligent agents in video games that can adapt their actions based on the game state and user instructions.

- Process Automation: Automating complex tasks in business processes by breaking them down into actionable steps.

Conclusion

The Action Transformer Model extends the original Transformer architecture with explicit reasoning and decision-making capabilities, opening up new possibilities for complex and dynamic NLP tasks. Implementing one can be challenging, but its potential across dialogue systems, robotics, gaming, and process automation makes it a promising area of research and development. As NLP continues to evolve, the Action Transformer could play an important role in enabling machines to turn natural language input into appropriate sequences of actions.

To learn more: https://www.leewayhertz.com/action-transformer-model/